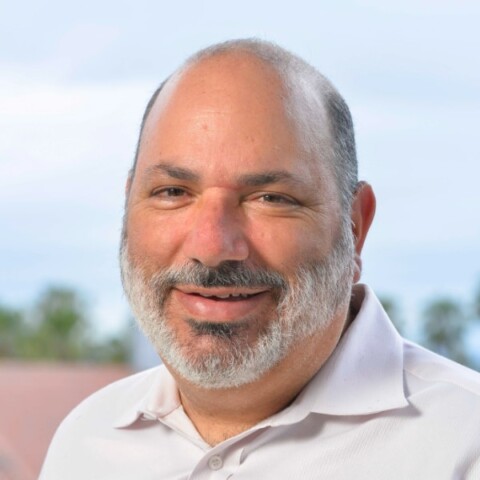

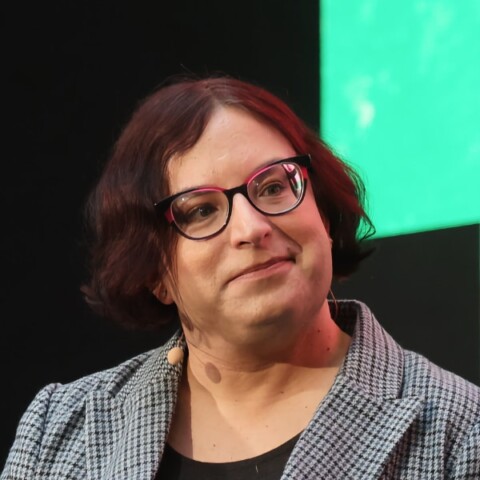

As AI systems become ubiquitous in our everyday lives, traditional user-centered design is no longer sufficient, argues computer scientist James Landay, Co-Director of the Stanford Institute for Human-centered Artificial Intelligence (HAI), in his insightful DLD25 conversation with Axios journalist Ina Fried.

“To me, it’s shocking how rarely we’re still talking about the role of humans in AI”, Ina Fried observes, noting the importance of Landay’s work on human-computer interaction.

Artificial intelligence has the potential to do a lot of good, Landay makes clear – assuming the right guardrails are in place. “It’s going to change our health. It’s going to change our education. It’s going to change government”, he says. “But we also need to make sure that we guide it in a positive way, because it won’t end up being good by itself.”

Landay advocates for “community-centered design” that considers all stakeholders affected by AI tools, not just direct users – especially since artificial intelligence “can start to have societal-level effects”, for example when algorithms influence user behavior on social media platforms.

Watch the video to learn why human-centered AI requires diverse teams that extend beyond technical experts, how bias inherent in training data poses a challenge, and why ethicists should be embedded in product teams early in the development cycle.